In this constantly evolving tech landscape, artificial intelligence (“AI”) is also transforming the employment scene, redefining roles and interactions within the workplace. As algorithms and human expertise join forces, AI boosts efficiency and precision, while human intuition still thrives in uncertain situations. The result? A dynamic, hybrid workforce where cutting-edge technology and human insight work hand in hand, driving productivity and shaping the future of work.

This is the second part of our article on the AI & GDPR interplay, written by bpv GRIGORESCU STEFANICA lawyers Diana Ciubotaru (Associate) and Silvana Curteanu (Associate). Here we delve into the most significant AI & GDPR topics within employment relationships and point out the requirements incumbent on the employer as a data controller and deployer[1] when using AI tools.

Don’t forget to also check the first part of this article, where we assessed the data protection background and its algorithmic readiness by reviewing the main GDPR provisions relevant to the development and deployment of AI systems and how the AI Act impacts the GDPR’s rules.

GDPR, AI and WoW (the World of Work)

(i) The first steps and the compliance concerns

AI systems in recruitment and human resources (“HR”) processes offer significant benefits, such as accelerating recruitment and hiring and improving candidate communication. Despite these advantages, employment remains a human-oriented field, and there is a certain reluctance to rely fully on AI systems for recruitment processes from start to finish. Recent developments in AI include tools like virtual assistants, which can source resumes, contact candidates, and conduct interviews using machine learning (ML) and platforms such as VaaS (Voice as a Service). A survey[2] shows that 62% of HR professionals anticipate that certain recruitment stages (e.g., candidate application and selection for the relevant position) will be fully automated by AI.

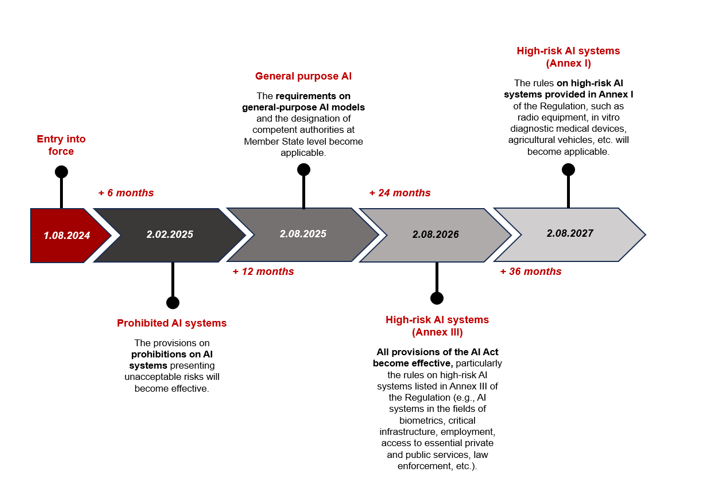

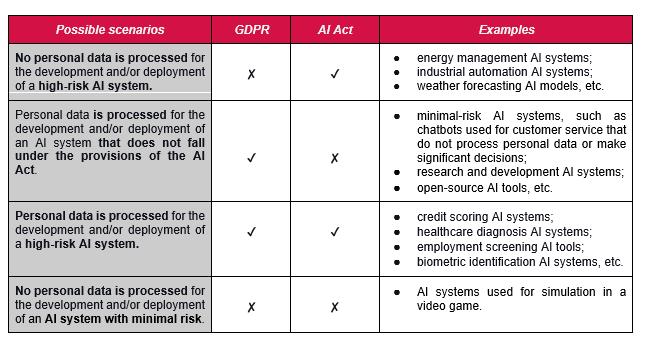

While AI tools can streamline tasks and provide data-driven insights, they may also raise compliance concerns, particularly under the European Regulation on Artificial Intelligence (“AI Act”). Under this recent and highly debated regulation, AI systems used in employment decisions (e.g., AI platforms making employment decisions on task allocation, promotion and termination of employment relationships, AI tools used for monitoring or evaluating the employees and their performance) are classified as high-risk AI systems. In this context, the compliance concern remains: how can an employer benefit from all the AI-based tools and facilities while still being GDPR compliant?

So, let’s shed some light on certain practical steps to be followed by employers when using AI tools or when acting as AI deployers.

(ii) Practical steps for employers

Regardless of the purposes for which AI tools are used, there are no exemptions from the GDPR requirements for technology-enthusiast employers. Here’s a breakdown of the key actions that may contribute to data privacy compliance:

1. Identify and document a lawful basis for processing

Firstly, the employer must identify the appropriate legal basis (as provided by Art. 6 of the GDPR). Generally, for processing employees’ data, the most common lawful bases may include:

▸ Performance of a contract: may be applicable when AI tools are used to manage certain aspects of the employment relationship (e.g., AI-driven payroll systems that automate salary calculations, deductions and benefits management, or AI tools that assess employee performance metrics to check whether employees meet the requirements and obligations set out in their employment contracts, such as achieving certain productivity targets, etc.).

▸ Compliance with a legal obligation of the employer: for example, complying with occupational health and safety regulations to ensure a safe working environment. AI-based tools can help evaluate compliance and alert employers and management when a safety breach occurs (e.g., identifying employees not wearing protective gear when it is mandatory, etc.).

▸ Legitimate interest: it is often relied on, but it always requires a “balancing test” to assess whether the employer’s interests are overridden by the interests or fundamental rights and freedoms of the employees.

▸ Consent: due to the power imbalance between employers and employees, relying on this legal basis is tricky in employment contexts, but it may apply where employees voluntarily participate in optional programs within the company (e.g., wellness or mental health programs that use AI tools to provide personalized support or recommendations, such as fitness apps or stress management tools, or AI-based tools that analyze employee behavior to provide personalized feedback, coaching or career development plans, etc.).

In such cases, the employer must ensure that the employees have the possibility to withdraw their consent for such processing without facing negative consequences.

As regards documenting the chosen lawful basis, employers should clearly record it for each processing activity (in a record of processing activities) in order to hold evidence in this regard.

2. Conduct a Data Protection Impact Assessment (DPIA)

▸ Targeting a purpose: once the specific purpose of the processing has been established, conducting a DPIA allows the employer to assess the potential risks of processing personal data through AI-based tools in day-to-day activities.

▸ When to conduct it: the DPIA must be conducted before implementing or using the AI-based solutions, especially where the processing involves systematic monitoring, large-scale processing or sensitive data (e.g., biometric or health data).

▸ The key elements to be included in the DPIA are:

– the description of the processing activities subject to analysis.

– the assessment of the necessity and proportionality of carrying out the activities.

– the evaluation of risks to the individuals’ rights and freedoms.

– the measures envisaged by the employer to mitigate the risks.

The AI Act counterpart of the DPIA is the Fundamental Rights Impact Assessment (FRIA) regulated by Art. 27 of the AI Act. A FRIA must be conducted before deploying a high-risk AI system by deployers that are bodies governed by public law or private entities providing public services (e.g., private hospitals or clinics providing public health services on the basis of public-private partnerships, bus, tram or metro operators operating on the basis of a concession contract with public authorities, etc.). Similarly to the DPIA-related requirements, Art. 27 of the AI Act sets out the mandatory elements that a FRIA must include.

3. Ensuring transparency by providing clear and comprehensive information to employees

In practice, data controllers implement the transparency principle by providing data subjects with privacy notices that include the information required by Art. 13 and 14 of the GDPR. When it comes to AI solutions, employers should ensure that these privacy notices also disclose the very fact of interacting with an AI system and allow the data subjects (i.e., the employees) to understand, as the case may be, how the AI systems make decisions about them, how their data are used to test and/or train a given system, and the possible outcome of such AI-powered processing. Broadly, the privacy notices should address, among others, the following:

– what data is collected;

– the fact that the employees will interact with an AI system (mandatory in the case of high-risk AI systems);

– how data is processed using AI-based tools;

– what is the purpose of the processing activities;

– the rights of employees regarding AI-based processing.

The employer must ensure that the elements above are explained in a clear and easily understandable manner, especially with regard to any automated decision-making and profiling that may impact the employment relationship.

4. Ensure compliance with the data minimization and purpose limitation principles

Whether they deploy or simply use AI-based tools, employers must effectively respect the principles of data minimization and purpose limitation provided by the GDPR (Art. 5(1)(c) and Art. 5(1)(b)). In this regard, employers must:

▸ Limit data collection: only collect the data that is necessary for the specific purposes for which the AI-based tool is used or deployed within the company.

▸ Explicitly communicate the specific purposes: clearly define and communicate to data subjects (i.e., any person within the company) the purposes for which data will be used and ensure AI systems do not repurpose data beyond the initial intentions.

5. Implement robust security measures

In line with the GDPR’s requirements (Art. 32), given that the use of AI-based tools within employment relationships involves processing employees’ personal data, employers must also implement appropriate security measures. These may include:

▸ Technical safeguards: frequently used technical and organizational measures include data encryption, access controls and secure storage solutions.

▸ Conducting regular security assessments: for example, regularly auditing AI systems (deployed or used) to ensure they are secure and identify any potential vulnerabilities.

▸ Implement a security incident response plan: employers should draft an internal policy or a protocol for responding to data breaches, including how to notify affected employees and relevant authorities in such scenarios.

6. Paying increased attention to automated individual decision-making and profiling

Every employer using AI-based tools in its relationships with employees must address these specific issues, which frequently raise concerns for data subjects (i.e., the employees). Thus, it is important to:

▸ Ensure human oversight: implement the appropriate measures so that decisions impacting employees are not based solely on the results generated by the AI tool and that meaningful human review is provided.

▸ Properly inform the employees: if AI tools are used for automated individual decision-making (e.g., hiring decisions, tools that automatically evaluate employees, or automatic task allocation based on individual behavior, personal traits or characteristics), employees have the right to be informed of how the decisions are made.

▸ Make sure the employees know their rights: employees must be informed about their right to object to profiling[4] and not to be subject to decisions based solely on automated processing[3], as well as how to challenge such decisions (Art. 21 and Art. 22 of the GDPR are relevant in this case). You can find out more about automated individual decision-making and profiling in relation to this matter in Part III of our article.

7. Check the AI contractors carefully and conduct due diligence

These preventive measures may include the following:

▸ Assess third-party providers/contractors: employers should ensure that AI vendors comply with data protection regulations and have implemented appropriate security measures in their AI solutions.

▸ Concluding data processing agreements (DPAs): where vendors act as data processors, employers should sign contracts with them that include data protection clauses specifying the roles and responsibilities of each party under the specific conditions laid down by Art. 28 of the GDPR.

▸ Conducting regular audits: employers should monitor third-party compliance, especially with regard to cloud-based AI solutions.

8. Ensure data accuracy and fairness principles

The GDPR principle of accuracy must be observed for personal data used as input for AI systems, especially given the potentially harmful outcomes of training AI with inaccurate data, which may single out people “in a discriminatory or otherwise incorrect or unjust manner”[5]. Therefore, whether they act as AI system deployers or simply as users of AI-based solutions, employers should:

▸ Conduct regular data quality checks: verify whether the data used in or fed into the AI models is accurate, up to date and relevant for the purposes pursued.

▸ Conduct audits to identify potential biases: employers should evaluate AI systems for potential biases that may occur in decision-making processes (e.g., in hiring, AI-based recruitment tools may generate biases against women).

▸ Propose corrective measures, if necessary: implement mechanisms to correct any biases or inaccuracies identified during the audits.

9. Organize training sessions for employees and managers

To keep up with technological developments while acting in full compliance with applicable legal provisions, investing in human resources and know-how within the company is paramount. Thus, among others, employers should train their employees on:

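▸ AI systems: employers should ensure that staff, especially those involved in managing AI tools, understand their data privacy responsibilities (Art. 4 of the AI Act also requires deployers to take measures to ensure a sufficient level of AI literacy among their staff).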

▸ Data protection awareness: employers should train staff on the effective implementation and application of the GDPR principles (or local data protection laws), such as data minimization, purpose limitation and lawfulness of processing.

10. Constantly review and update data protection policies

Some of the most common practices that may help employers comply with data protection principles and rules when using (or even deploying) AI-based solutions or tools include:

▸ Regular policies review: periodically update the internal data protection policies to account for changes in AI technology or regulatory requirements.

▸ Proper documentation: this can consist of keeping records of policy changes and ensuring they are accessible to employees.

▸ Conducting internal audits: regular internal audits to verify compliance with data protection policies and practices can be a business-saving solution.

11. Prioritizing and always respecting employees’ rights

When it comes to the rights of data subjects (i.e., the employees), employers, as data controllers, must:

▸ Enable access, rectification, and erasure of data: employers must ensure that employees can access their data, request corrections, or ask for data to be deleted.

▸ Data portability: if relevant and upon request, employers must provide employees with their data in a structured, commonly used and machine-readable format.

▸ Respond to all justified requests: employers must establish and implement a process for responding promptly to access or deletion requests, or any other request submitted by employees under the GDPR’s provisions (in principle, without undue delay and within one month of receipt, as required by Art. 12(3) of the GDPR).

(iii) The conclusion?

As can be observed, using AI-based platforms or tools is undoubtedly a powerful asset, but only if used responsibly. By implementing transparent policies, prioritizing data minimization, and embracing a privacy-by-design approach, employers can turn AI into a robust ally in their compliance journey, while boosting the efficiency of various tasks and interactions conducted by employees.

Stay tuned for the third and last part of our article!


[1] “Deployer” means a natural or legal person, public authority, agency or other body using an AI system under its authority, except where the AI system is used in the course of a personal non-professional activity (Art. 3(4) of the AI Act).

[2] https://www.tidio.com/blog/ai-recruitment/

[3] Decisions made about individuals solely by automated means, without any human involvement. This typically involves the use of algorithms or artificial intelligence (AI) to process personal data and make decisions based on that data.

[4] The automated processing of personal data to assess or predict various characteristics of an individual. The goal is often to categorize people based on specific traits or behaviors, allowing organizations to make decisions or target individuals in specific ways (e.g., regarding the work performance, economic situation, health, behavior, interests, location).

[5] Recital (59) of the AI Act